Many of you have surely come across the popular claim that all video formats use 24 frames per second because this matches the perceptual limits of the human eye. In fact, this sweeping claim rests on a number of misconceptions and myths. Over the past two to three years, this very characteristic of the image has been at the center of a small revolution in standards, affecting many areas, from the home TV market to film production.

⇡ Our eyes

First of all, the capabilities of our eyes are by no means limited to the notorious 24 frames/s. It is hard to express the “analog” human eye as an exact number, but the approximate limit, depending on the individual, ranges from 60 to 200 frames/s. Of course, we perceive visual information with some “inertia,” but it is still possible to train yourself to notice extremely brief details; airplane pilots, for example, have traditionally excelled at this. There is also a difference between central and peripheral vision: when you look at a cathode ray tube monitor “out of the corner of your eye,” you notice flicker that is not visible when you look directly at the screen.

Another example that many people will recognize is video games. Try playing a new first-person shooter on a computer with an average configuration and you will see the slowdowns in all their glory. Using a special program (Fraps), you can measure the current frame rate on the display. The comfortable minimum, at which the controls feel smooth and the user finally stops noticing image stuttering, is around 45-50 frames per second. If the frame rate drops below 25-30 FPS, playing becomes, as a rule, almost impossible. And the difference between 24 fps and the ideal value of 60 fps is noticeable to any sighted person.

Perhaps someone will now recall the famous 25th frame, an old horror story and supposedly universal tool that unscrupulous companies use to increase sales. In 1957, the idea of a hidden frame that directly affects the subconscious was put forward by the American James Vicary. But five years later, the author of the dubious project himself admitted that it was nothing more than fiction and had no effect on sales. In fact, this 25th frame is quite noticeable if you look closely at the screen; you can even manage to read short words or remember pictures and patterns. And, of course, there is no special impact on the subconscious.

However, after the breakup of the Soviet Union, the domestic press took up the myth of the 25th frame with incomprehensible persistence and promoted it so hard that even now many of our citizens sincerely believe in this method of manipulating consciousness. Even the governments of Russia and Ukraine passed special laws limiting the use of hidden advertising technologies (for example, Article 10 of No. 108-FZ “On Advertising”).

⇡ In cinema halls

It all started with silent cinema, which used film running at 16 frames per second. When watching excerpts from pre-war films, you have probably noticed that the action on screen is unnaturally fast; this is a consequence of footage shot at that frame rate being played back at a higher one. Then, when sound came to film, the rate was increased to 24 frames per second to accommodate the audio track (otherwise the sound was too distorted), and this value remains in use to this day.

However, to be precise, cinemas show films not at 24 but, in effect, at 48 images per second. This is due to one part of the projector, the shutter: a mechanical device that periodically blocks the light while the film advances in the frame window. Roughly speaking, each frame is flashed twice with a dark interval in between, so flicker is almost imperceptible. Even though 24 and 48 frames/s then carry the same information, the latter is much more comfortable for human perception. Thanks to the “inertia” of our visual perception, the shutter hides the “jerks” in the transition from one frame to the next.

Nevertheless, for decades there has been talk in the film industry about the need to move beyond the usual standard of 24 frames per second. This was hampered by a number of problems, mainly technological. However, in recent years, as films have increasingly been shot and shown in theaters using digital equipment, the task has become significantly easier.

But there is one more aspect, concerning how cinematic the picture looks. At 60 fps, for example, our eyes receive more information, which changes the perception of what is happening on screen. The artificiality of the sets and visual effects becomes noticeable, giving the impression that you are watching a stage production or standing right in the studio where the film is being shot. This undermines the film's believability and often cancels out some directing and camera techniques. But none of this negates the positive properties of high frame rate video: the amazing smoothness of the image and the naturalness of the picture, just like in real life, which create an excellent sense of presence and belief in what is happening. And finally, a larger number of frames eliminates flicker (especially noticeable at the edges of the screen), reducing eye fatigue.

James Cameron, the main film innovator on our planet, who made the whole world fall in love with 3D, has seriously promised to make another revolution in the industry. His next projects, “Avatar 2” and “Avatar 3,” will be filmed at 60 frames per second and will clearly demonstrate to humanity all the advantages of the technology. However, Peter Jackson with his “The Hobbit” intends to get ahead of the director of “Titanic”: at the end of this year we will be able to watch a film based on Tolkien’s novel at 48 full frames per second.

⇡ At your home

With television, things are a little different. There are three common television broadcast formats in the world: NTSC, PAL and SECAM. Each has its own frequencies, video transmission properties and is found in strictly defined regions. NTSC is an American standard that provides 30 fps. Technologically similar PAL and SECAM are used in other parts of the world and provide 25 frames/s.

As with the shutter in film, the number of images in television broadcasts should be multiplied by two. This is due to interlaced scanning, in which one frame is divided into two half-frames (fields), one consisting of the even lines and the other of the odd lines. As a result, the on-air image appears quite smooth, which is not surprising at 60 or 50 fields per second for NTSC and PAL/SECAM, respectively.
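The splitting of a frame into two half-frames can be sketched in a few lines of Python. This is only an illustration: a frame is modeled as a plain list of scan lines, lines are counted from zero, and the function names are made up for this example.

```python
def split_into_fields(frame):
    """Split a frame (a list of scan lines) into two interlaced half-frames."""
    even_field = frame[0::2]  # lines 0, 2, 4, ...
    odd_field = frame[1::2]   # lines 1, 3, 5, ...
    return even_field, odd_field


def fields_per_second(frames_per_second):
    """Every interlaced frame is transmitted as two half-frames."""
    return frames_per_second * 2


frame = [f"line {n}" for n in range(6)]
even, odd = split_into_fields(frame)
print(even)  # ['line 0', 'line 2', 'line 4']
print(odd)   # ['line 1', 'line 3', 'line 5']
print(fields_per_second(30), fields_per_second(25))  # 60 50
```

The last line reproduces the doubling described above: 30 frames/s becomes 60 half-frames per second, and 25 becomes 50.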

If you watch the same film on a large TV from a DVD and on television, you will easily notice a fundamental difference in the image. During television broadcasting, the picture will be more natural and even somewhat similar to theatrical production. A reverse experiment: try buying a DVD of a football or hockey game. The athletes will move somehow more sharply, and the broadcast will surprise you with an unusual “raggedness,” which is especially noticeable when the camera moves horizontally along the stadium. Digital formats like DVD or Blu-Ray use traditional 24 frames per second without shutters or interlaced frames, so on large TVs in panoramic scenes it is easy to notice annoying image jerking, particularly at the edges of the screen, due to the characteristics of peripheral vision.

Unfortunately, digital media with 48, 60 or 100 frames per second are not yet in a hurry to enter our homes. Even the Blu-Ray edition of the upcoming “The Hobbit” has already been announced in the usual 24 frames/s standard, which, in general, is logical - video players simply cannot play other formats. But you can enjoy the beauty of high frame rates with the help of modern TVs that support image smoothness technology.

Philips pioneered this area with its patented Digital Natural Motion system, which can display 100 frames per second. Then other manufacturers caught up, each giving the same concept its own name: Motion Plus at Samsung, Motionflow at Sony, TruMotion at LG and Motion Picture Pro at Panasonic. The operating principle is, in general terms, quite simple: between the original frames, the TV’s video processor inserts computed intermediate frames, which provide high clarity and smooth transitions. According to manufacturers, some devices now reach frequencies of 400 and even 800 Hz, meaning that several hundred artificial frames per second are calculated. Such high values are really only useful for high-quality 3D; for ordinary video, 120 frames/s is already more than enough. However, if you use such a TV at home for a long time, you will notice a number of inconveniences associated with its smoothing system.
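The idea of inserting intermediate frames can be illustrated with a deliberately crude sketch. It assumes the simplest possible method, a pixel-by-pixel blend of two neighboring frames; the video processors in real TVs are far more elaborate, and nothing here reflects any manufacturer's actual algorithm. Frames are modeled as short lists of brightness values, and the input is assumed to contain at least one frame.

```python
def blend(frame_a, frame_b, t):
    """Mix two frames pixel by pixel; t=0 gives frame_a, t=1 gives frame_b."""
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def interpolate(frames, factor):
    """Insert factor-1 synthetic frames between each pair of source frames."""
    output = []
    for current, following in zip(frames, frames[1:]):
        for step in range(factor):
            # step 0 reproduces the source frame, later steps are synthetic
            output.append(blend(current, following, step / factor))
    output.append(frames[-1])  # keep the final source frame
    return output


# Two tiny "frames" of three grayscale pixels each.
source = [[0.0, 0.0, 0.0], [100.0, 50.0, 0.0]]
for frame in interpolate(source, 4):
    print(frame)
```

With a factor of 4, the two source frames become five: the originals plus three blended frames between them. A plain blend like this produces ghosting rather than true motion, which hints at why the calculated frames sometimes show artifacts.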

Firstly, a fairly common problem is connecting to your computer. For example, Samsung LED panels prefer that the frequency of the incoming signal exactly matches the number of frames per second in the video file being played. That is, the video card, as a rule, produces 60 Hz, and the BD-RIP you downloaded contains the traditional 24 frames/s. When displaying a picture on a TV, twitches and artifacts will appear every few seconds - the Motion Plus system will try to calculate additional frames based on the 60 available ones, while there are only 24 of them in the film itself.
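The mismatch between 24 frames/s and 60 Hz is easy to see with a little arithmetic: 60 / 24 = 2.5, so frames cannot all stay on screen for the same number of refreshes. The sketch below counts how many refreshes each video frame occupies. It is a simplified model of frame repetition only and says nothing about how any particular TV processes the signal.

```python
from collections import Counter


def repeats_per_frame(video_fps, display_hz, frame_count):
    """Count how many display refreshes each video frame stays on screen."""
    shown = Counter()
    refresh = 0
    while True:
        # Index of the video frame that is current at this refresh.
        frame = refresh * video_fps // display_hz
        if frame >= frame_count:
            break
        shown[frame] += 1
        refresh += 1
    return [shown[i] for i in range(frame_count)]


print(repeats_per_frame(24, 60, 8))  # [3, 2, 3, 2, 3, 2, 3, 2]
print(repeats_per_frame(24, 24, 8))  # [1, 1, 1, 1, 1, 1, 1, 1]
```

At 60 Hz the frames alternate between three and two refreshes, so motion advances unevenly; at a matching 24 Hz every frame is shown exactly once.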

You can force the video card into 24 Hz mode, but then you will have to put up with a sluggish operating system interface, and the twitching (in the case of Samsung LED panels) will, for unknown reasons, never completely disappear. You will therefore get better results with a Blu-Ray/DVD player (the Sony PlayStation 3 is an excellent option) or an HD media player; there should be no problems with such devices.

Secondly, even the new frame calculation technologies in the most sophisticated LED panels sometimes make mistakes. In some scenes you will notice artifacts and trails. This happens especially often in scenes where an object in close-up moves quickly across the screen.

And thirdly, not all content benefits from added smoothness. Of course, it is useful for 3D films and cartoons, where the depth then appears richer. Frame calculation systems are also good for films dominated by panoramic shots with a high level of detail, such as “Avatar,” “Tron: Legacy” or “Pan's Labyrinth.” All this is also perfect for documentaries, TV series and sports broadcasts. By contrast, with the smoothing effect it is almost impossible to watch films shot with a deliberately “shaky” camera, such as “The Bourne Ultimatum,” “Cloverfield” and a number of action films: with the additional frames, what happens on screen looks like a mess of artifacts.

Finally, fourthly, as we said above, the extra realism and theatricality that smoothing systems add sometimes turn certain films into laughable stage shows. You can immediately see the poorly drawn backdrops, the mediocre special effects added in post-production, and other delights. If you want to see for yourself, turn on the latest “Resident Evil,” Sam Raimi's “Spider-Man” or one of the “Hulk” films on an advanced LED panel. As for old films, there is nothing more to say: when watching the classic “Star Wars,” you will see with your own eyes that all the spaceships really are plastic models filmed in a room with black wallpaper.

By the way, if anyone thinks that frame calculation systems will help get rid of slowdowns in games, this, of course, is not the case. The controls will become somewhat “wobbly”: the image will react to the player’s actions with some delay. In general, it is impossible to play with smoothing turned on.

Therefore, smoothing systems have quite a few principled opponents who complain about the loss of cinematic quality in some films, and such people are easy to understand. This leads to a simple conclusion: smoothing should be used very selectively, depending on the content being played. In general, however, the existence of such technologies is fully justified: where smoothing really is appropriate, the picture on the TV screen will simply give you pleasure.

⇡ Conclusion

All the words written above and the examples mentioned are nothing compared to your personal impressions. If you are a frequent visitor to cinemas, then in the foreseeable future you will see for yourself the advantages of 48 or 60 frames per second - Peter Jackson and James Cameron will find ways to demonstrate the advantages of the technology in all its glory.

If you are considering buying a new TV (or your home panel already has such capabilities), pay attention to whether it has a smoothing system. You can ask the salespeople in the store to turn on a demo on the model you are interested in, preferably a dynamic movie trailer or a 3D image, and draw your own conclusions from what you see.

In the beginning, film was very expensive - so much so that in order to save it, directors tried to use the smallest number of frames that ensured smooth movement. This threshold ranged from 16 to 24 frames per second, and ultimately a single level of 24 frames per second was selected. This standard has been established for many decades and is still used in cinematography.

When television appeared, different countries began to use different numbers of frames per second, depending on the frequency of the AC mains. This split the world's standards. Countries with a mains frequency of 60 Hz, such as the United States and Japan, introduced television at 30 frames per second, while countries with 50 Hz mains (mainly in Europe and Asia) chose the standard of 25 frames per second.

The digital era has brought enormous technological changes. First, most cameras and displays support several different recording speeds, so all the old frame rate standards can still be used. Second, new possibilities have appeared. The High Definition (HD) and Ultra High Definition (UHD, popularly 4K) specifications support 60 frames per second, which allows creators to record more dynamic films and even create high-quality illusions of three-dimensional images.

How many frames to choose

Selecting the number of frames depends on the creative vision and the effect you want to achieve. A slower speed causes the brain to subconsciously recognize that the image being viewed is “fake,” so choosing 24 frames per second can do a great job of emphasizing imagination-based concepts, such as in fairy tales and other unrealistic films.

The higher the frame count, the more realistic the scenes look, so this speed is ideal for modern feature films, documentaries or action films. While 60 frames per second is technically the best solution for achieving smoothness, stop-motion animations look great at 12 frames per second, and seeing the ball during a match recorded at 24 frames per second is almost impossible.

Creators often try to stick to the frame rate traditionally used in their region, i.e. 29.97 fps in the US and Japan and 25 fps in Europe and most of Asia. Make sure your choice is a considered one.

Remember that the human eye is a complex device and does not recognize individual frames, so these recommendations should not be considered as scientifically proven facts, but rather as the result of many years of observation by different people.

Below you will find information about common frame rates used in films and music videos:

- 12 fps: the absolute minimum required for movement to occur. Lower speeds will be perceived as a collection of individual images.

- 24 frames per second: The minimum value at which the movement appears reasonably smooth. This is a good option that is suitable for creating the atmosphere of an old film.

- 25 fps: TV standard in EU and most Asian countries.

- 30 fps (29.97 to be exact): Standard used in the USA and Japan.

- 48 fps: The value is twice that of traditional films.

- 60 fps: Currently the most advanced recording speed. Most people don't see much difference in the smoothness of motion when shooting above 60 fps. This number of frames is great for displaying dynamic action.

Animation at 12 frames per second

This movie, shot at 12 frames per second, shows what effect can be achieved with low frame count recording.

California at 60fps

This movie, shot at 60 frames per second, has a clearer and smoother image. It is the complete opposite of the previous example. Remember to change the video quality setting in YouTube to 720p or 1080p (by clicking the gear icon in the YouTube player).

High frame rate is the best solution for YouTube

Until recently, the maximum frame rate on YouTube was 30 frames per second, but it is now possible to watch video at 60 fps (and also, of course, at 48 and 50 frames per second).

Creators love making animations and gameplay videos at 60 frames per second because this speed lets dramatic footage from a game console be displayed at a high frame rate, resulting in sharper, smoother video.

Live broadcasts can also be shown at a higher number of frames per second. When you start streaming live on YouTube at 60 frames/s, the system re-encodes the stream to the standard 720p60 and 1080p60 formats. This ensures the image is smooth enough for presentations, games and other dynamic material.

Recording at 60 frames per second can also be used in more general films. When shooting panoramic video, recording at 60 frames per second helps keep movement clear and smooth, whereas turning the camera too quickly at lower frame rates can cause image instability or loss of focus. This happens because at a lower frame rate, such as 24 fps, the camera's shutter stays open longer, resulting in motion blur. At 60 fps, you can record natural-looking pans and shorten the shutter opening time for crystal-clear images.
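The link between frame rate, shutter time and blur can be estimated with simple arithmetic. The sketch below assumes that the shutter is open for a fixed share of each frame interval (one half is used here as an assumed, commonly quoted value) and that an object crosses the picture at a constant speed in pixels per second. The function name and the numbers are illustrative only.

```python
def blur_length_px(speed_px_per_s, fps, shutter_fraction=0.5):
    """Approximate smear of a moving object, in pixels, during one exposure.

    shutter_fraction is the share of the frame interval during which the
    shutter is open (0.5 is an assumption; it cannot exceed 1.0).
    """
    exposure_s = shutter_fraction / fps
    return speed_px_per_s * exposure_s


# An object crossing a 1920-pixel-wide picture in one second.
for fps in (24, 30, 60):
    print(fps, round(blur_length_px(1920, fps), 1))
```

Under these assumptions the smear shrinks in proportion to the frame rate: roughly 40 pixels at 24 fps against roughly 16 pixels at 60 fps.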

A high frame rate can also be useful when darkening or lightening the image, where lower frame rates may result in a loss of image quality.

Of course, you shouldn't use one fixed frame rate for the entire movie. For example, you can choose 24 fps to get a romantic effect, and then switch to 60 fps when needed:

- Explosions: Movie explosions shot at 24 frames per second appear either clear but choppy or blurry but smooth. With more frames per second, very fast explosions can be displayed in detail, with great smoothness and clarity.

- Liquids: High frame rates give you advanced aperture control when shooting fast-moving liquids.

- Dynamic scenes: for example, boxing, wrestling, etc.

- Gunshots and other fast moving objects: Motion blur at lower frame rates makes it impossible to track fast moving objects. In scenes shot with a large number of frames per second, this problem does not occur.

You don't have to choose between blur and low detail

In scenes with fast action and a lot of small, moving objects, such as this Nintendo clip, a frame rate of 60 fps allows you to capture all the smallest details while maintaining extraordinary smoothness of the image.

Try it

- Record a one-minute video at a high frame rate and then at a low frame rate. Share the result with the community and ask members what they liked about each version.

PC Gamer Editor Alex Wiltshire talked with neuroscientists and psychologists to find out how many frames per second the human eye and brain need in games. The answer to the question turned out to be difficult.

Many gamers know that in games not only the number of frames matters but also how steadily they arrive: for example, a stable 30 frames can feel much more pleasant than a frame rate that jitters between 40 and 50.

This is because frame rate drops in some scenes are perceived as those notorious slowdowns (the brain expects to see movement with the same smoothness as before, but the computer cannot process the image at the required speed).

Therefore, sometimes developers who have not paid enough attention to optimization release a game with a limit of 30 frames even on PC, which usually causes noticeable indignation among gamers. And for console games without multiplayer mode, 30 frames is generally the standard.

However, in his study, Wiltshire only touched on stable frame rates and did not touch on the issue of vertical synchronization and other computer parameters that affect the perception of the picture.

Eyes and brain work in tandem

The debate about how many frames per second the human eye can perceive has been going on for a long time, largely because there is no clear answer to this question.

As Wiltshire notes, a person does not read reality the way a computer does, and visual perception is based entirely on the joint work of the eyes and brain. This is why, for example, people perceive movement and light differently, and why peripheral vision handles some aspects of a picture better than central vision, and vice versa.

The time it takes a person to perceive visual information is the sum of the time light takes to reach the eye, the time needed to transmit the received information to the brain, and the time needed to process it.

According to psychology professor Jordan DeLong, when processing visual signals, the brain is constantly calibrating, calculating averages from thousands and thousands of neurons, so the entire system is more accurate than its individual components.

As researcher Adrien Chopin notes, the speed of light can hardly be changed, but it is quite possible to speed up the part of visual perception that takes place in the brain.

Games are perhaps the only way to significantly improve the main indicators of your vision: sensitivity to contrast, attention and the ability to track the movement of many objects at the same time.

Adrien Chopin, cognitive brain researcher

As Wiltshire points out, it's the gamers who are most concerned about high frame rates who are able to process visual information faster than anyone else.

Differences in the perception of movement and light

If a light bulb flickers at 50 or 60 Hz, the lighting appears constant to most people, but some do notice the flicker. A similar effect can be seen by turning your head while looking at a car's LED headlights.

At the same time, some fighter pilots during tests could see images that appeared on the display for 1/250th of a second.

However, both of these examples do not speak to how the human eye perceives games where movement is the main parameter.

As Professor Thomas Busey notes, for very brief stimuli (shorter than 100 milliseconds), the so-called Bloch's law comes into play. The human eye is unable to distinguish a bright flash that lasted a nanosecond from a less bright flash that lasted a tenth of a second. A camera works on a similar principle: it can let in more light at a slow shutter speed.

However, Bloch's law does not mean that the limit of human perception stops at 100 milliseconds. In some cases, people can discern artifacts in an image at 500 frames per second (2 milliseconds per frame).
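The conversion between a frame rate and the time each frame stays on screen is a one-line formula: 1000 milliseconds divided by the number of frames per second. The helper name in this small sketch is made up for illustration.

```python
def frame_time_ms(fps):
    """Duration of a single frame in milliseconds."""
    return 1000.0 / fps


for fps in (10, 24, 60, 90, 500):
    print(f"{fps:>3} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

So 500 frames per second corresponds to 2 ms per frame, while the 100-millisecond threshold mentioned above corresponds to only 10 frames per second.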

As Professor Jordan DeLong notes, the perception of movement largely depends on the position a person is in. If he sits still and watches the object, then this is one situation, but if he goes somewhere, then it is completely different.

This is due to the differences between central and peripheral vision that people inherited from their primitive ancestors. When a person looks directly at an object, he sees the smallest details, but his vision does not cope well with fast-moving objects. Peripheral vision, on the other hand, lacks detail but is much faster.

This is exactly the problem that developers of virtual reality headsets faced. While 60 or even 30 Hz is quite enough for a monitor that a person looks at directly, the frame rate must be raised to 90 Hz for the viewer to feel comfortable in VR. This is because the headset also fills the peripheral vision.

According to Professor Busey, if a user is playing a first-person shooter game, then the increased frame rate generally allows him to perceive the movement of large objects better than small details.

This is because during the game the gamer does not stand in one place waiting for enemies, but moves through virtual space using the mouse and keyboard, constantly changing position relative to opponents, who may appear in different parts of the monitor.

How many frames are enough

Scientists have differing opinions about how many frames per second a person needs. Professor Busey believes it's worth going at least past the 60Hz mark for comfort, but he doesn't know if going between 120 and 180fps will make a difference for some people.

Psychologist DeLong believes that a frame rate above 200 frames will be perceived by any viewer as real life, however, he is convinced that after 90 frames the difference for most people becomes minimal.

Researcher Adrien Chopin sees things differently. Yes, the more frames the better, but the human brain stops receiving useful new information from the picture at frequencies above 20 Hz. According to the scientist, the brain needs even less to register a small object.

When you want to perform a visual search, track multiple objects, or figure out the direction of movement, your brain will capture approximately 13 frames per second from the total stream. To do this, it calculates a certain average value from a number of neighboring frames, composing one of them.

Adrien Chopin, researcher

Chopin is convinced that there is no point in transmitting information above the 24 frames per second adopted in cinema. However, he understands that people see a difference between 20 and 60 hertz.

Just because you see a difference doesn't mean you'll become a better player. After 24 Hz, nothing will change significantly, although you may feel the opposite.

Adrien Chopin, researcher

What scientists agree on is that high frame rates have more of an aesthetic meaning than a practical one, and they do not believe that games should be developed in this direction.

Chopin is convinced that developers should think more about increasing resolution, and DeLong would like monitor and TV makers to think about how to achieve maximum contrast in the picture.

Developers talk about the difficulty of choosing between increasing resolution and frame rate in games.

So-called "graphics" has always been, is, and will probably remain the main subject of disputes among gamers. Participants in "this game/console is better" debates often argue their point with dry numbers, citing frames per second (fps) and resolution to prove the visual superiority of one project or console over another.

But few participants in these debates know exactly what these terms mean. What is the difference between 720p and 1080p, or between 30 fps and 60 fps?

Let's first define what these concepts mean.

Frame rate

Standard video and TV broadcasts consist of a large number of static images arranged in a certain sequence and played quickly one after another. A "frame" is one of these images, and the "frame rate" is a value that shows how often frames change to create motion. In our case, video games, the frame rate is how often the game changes the frame on the screen or monitor. This value is measured in frames per second (fps).

In order for the player to see movement in the game, frames must change very often. How often, you ask? For example, the standard in films is 24 fps (frames per second), while game developers try to deliver a stable 30 fps. If it is less, the game becomes jerky and uncomfortable to play. It's like listening to a music cassette with small sections of the tape cut out.

The frame rate of a game and the refresh rate of a monitor are different values. A monitor has its own frequency, which indicates how often it updates its image. It is measured in hertz (Hz), where 1 Hz is one picture update per second. The vast majority of modern monitors operate at 60 Hz, so ideally the game should also run at 60 fps. Put simply, a standard TV that updates its image at 60 Hz will show all 60 frames of a 60 fps game in one second.

So some games may have a problem on most displays if they do not run at 30 or 60 fps. If the game ignores these values (30/60 fps) and renders as many frames per second as it can manage (PC gamers are probably familiar with the "V-Sync off" option), this causes "screen tearing," where parts of several frames are visible at once. By enabling V-Sync (vertical synchronization), you force the game and the monitor to run in step and avoid screen tearing.

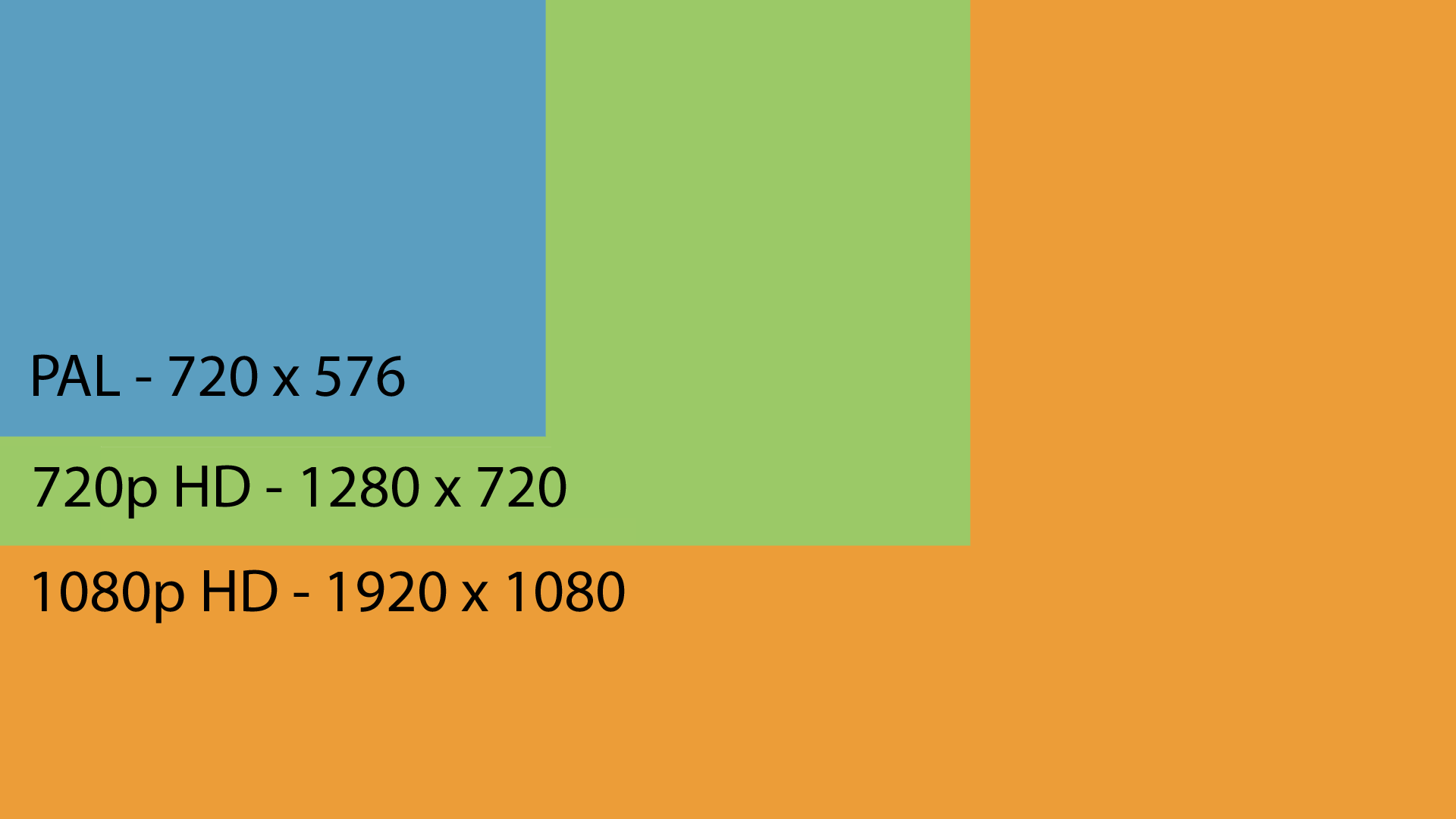

Resolution

The size of an image is its "resolution". Modern widescreen displays have a horizontal to vertical aspect ratio of 16:9, and resolution is the number of pixels (dots) in the image horizontally and vertically. The most commonly quoted resolutions are HD (High Definition), 1280x720 pixels, or simply 720p, and Full HD, 1920x1080 pixels, or 1080p.

An image in 1080p contains 2.25 times more pixels (the colored dots that make up a picture) than one in 720p, so it is noticeably harder for a game to generate an image in 1080p than in 720p. This is worth bearing in mind when it is reported that a game runs at 1080p on one console and at 720p on another. Both the PS4 and the Xbox One are capable of producing 1080p images in games, but now, at the very beginning of the new consoles' life cycle, not many games can run at 1080p, especially on the Xbox One. Therefore, in order to show off visual beauty, add more detail and so on, some games now run at resolutions lower than 1080p but higher than 720p. One example: the PS4 version of Watch Dogs runs at 900p (1600x900), and the Xbox One version at 792p (1408x792).
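The pixel counts behind these figures are easy to check. The short sketch below multiplies width by height for the resolutions mentioned in this section and compares each with 720p; the dictionary and function names are invented for the example.

```python
RESOLUTIONS = {
    "720p": (1280, 720),
    "792p": (1408, 792),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
}


def pixel_count(name):
    """Total number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


base = pixel_count("720p")
for name in RESOLUTIONS:
    count = pixel_count(name)
    print(name, count, round(count / base, 2))
```

The 1080p row gives 2,073,600 pixels against 921,600 for 720p, a ratio of 2.25; the intermediate 792p and 900p resolutions fall between the two.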

How do developers prioritize between fps and resolution?

Naughty Dog employee Cort Stratton is also a member of Sony's ICE Team, a group of elite programmers who create new graphics technologies that are shared among first-party development studios.

According to Stratton, resolution and frame rate are related. Generally, resolution depends entirely on the work of the GPU (the console's graphics processor). Here is how Stratton explains it in very simple terms:

- The CPU (the console's central processing unit) sends the GPU a list of objects that the graphics chip should render, along with technical information about the resolution to render them in. The GPU then gets to work, performing a dizzying number of calculations to determine what color each pixel (the dots that make up an image) should be. Therefore, raising the image resolution from 720p to 1080p does not affect the CPU's work at all, because the CPU simply sends data for processing and the GPU does the processing. But with such an increase the GPU has to perform about 2.25 times more calculations, because there are that many more pixels. This affects the number of frames per second. However, it is often possible to increase the resolution (up to a certain point, of course) without affecting the frames per second.

Peter Thoman, known for creating an impressive mod that improves the graphics in the PC version of Dark Souls, agrees with these statements:

- Increasing the resolution increases the load on the GPU, while increasing the frame rate affects the load on the CPU. So in cases where developers are limited by the CPU (or, for some special reason, by something other than the GPU), you can increase the resolution without affecting the frame rate.
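The relationship both developers describe can be illustrated with a deliberately simplified model. It assumes the CPU and GPU work in parallel, so the slower of the two sets the frame time, and that GPU time grows in proportion to the pixel count. Real engines are more complicated, and all the millisecond figures below are invented for illustration.

```python
def frames_per_second(cpu_ms, gpu_ms_at_720p, pixel_ratio):
    """Estimate FPS when CPU and GPU work in parallel.

    cpu_ms          -- CPU time per frame (does not depend on resolution)
    gpu_ms_at_720p  -- GPU time per frame at 720p
    pixel_ratio     -- pixel count relative to 720p (2.25 for 1080p)
    """
    gpu_ms = gpu_ms_at_720p * pixel_ratio  # assumed linear in pixel count
    frame_ms = max(cpu_ms, gpu_ms)         # the slower unit is the bottleneck
    return 1000.0 / frame_ms


if __name__ == "__main__":
    # CPU-bound game: raising the resolution leaves FPS unchanged.
    print(frames_per_second(16, 6, 1.0), frames_per_second(16, 6, 2.25))
    # GPU-bound game: the same change costs frames.
    print(frames_per_second(16, 10, 1.0), frames_per_second(16, 10, 2.25))
```

In the first pair the GPU has spare capacity, so going from 720p to 1080p is "free"; in the second the GPU becomes the bottleneck and the frame rate drops.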

Stratton said that, in his experience, developers most often decide to focus on frame rate rather than resolution and proceed from that priority. You can't just start making a game and wait until you hit the limits of the hardware at some particular resolution and frame rate. It all depends on the capabilities of the hardware and the game engine, the creativity of the studio's artists, the quality of competitors' games, and other things.

- In all the games I have worked on, the target frame rate was determined first, and all other aspects and decisions depended on it, - says Stratton. - Many developers decide to drop from 60 fps to 30 fps rather than cut certain visual effects. But usually frame rate is a line that developers don't cross.

- Personally, I prioritize high resolution over high frame rate. I don't attach special significance to frame rates higher than 30 fps.

However, there are some subtleties here too. For example, the higher the frame rate, the lower the input lag, that is, the time (in milliseconds) it takes for the player's actions (pressing a gamepad or keyboard button) to appear on the screen.

Here the opinions of Stratton and Thoman diverge, although in some ways they are similar. Thoman says:

- It all depends on the genre of the game. In action-based games, I don't accept anything lower than 45 fps. I believe that gamers should definitely pay attention to both resolution and frame rate, because resolution makes the game look better and frame rate makes the game more responsive and playable. I'm always surprised when publishers say resolution doesn't matter. If that is true, then why do they all publish promotional screenshots in 8K resolution?!

Stratton explains his position:

- Look at two games, Uncharted: Drake's Fortune (2007) and The Last of Us (2013). Both were made by the same development team at Naughty Dog, both were made for the hardware of the same console, the PS3, yet there is a huge visual difference between them. Let me remind you that the PS3 is almost 10 years old. Now take a look at PC games created 10 years ago and compare them with PS3 games released today. This is what you can achieve when you gain experience with a specific platform whose hardware does not change. Developers are constantly learning how to work with the hardware, inventing new tricks and optimizing processes in order to achieve the best performance possible on the platform at the moment. Six months after the release of the PS4 and Xbox One it is impossible to achieve an optimal 1080p/60fps, but this will come with time as developers acquire the necessary experience.

Each of you has run into the problem of games starting to slow down on your computer, and lucky is the one who has money on hand for new hardware. Today we will try to figure out what frame rate (hereinafter FPS) can be considered sufficient, and how high a frame rate a person can distinguish. What is the "gold standard", and why is it needed?

Most of you understand frame rate as the number of images shown per second of a video stream.

It's simple.

What is the maximum frame rate a human can discern?

There is no such value; it is a myth. If you live with this myth in your head, then you are in for a big debate with yourself as you read the material below. The human eye is made up of many receptors that constantly send information to the brain. You cannot name the number of receptors or the bandwidth to the brain, so throw this myth out of your head. If such a value existed, it would have been established by science.

Interaction between monitor and video card

To begin with, it is important to explain two simple concepts.

Frame rate (FPS) - the number of frames processed by your video card per second. This is an absolutely chaotic value that depends on your current tasks, the power of the video card, the load of the scene, the general state of the computer, and so on. Over a short period of time in the same game, the frame rate can vary greatly; it can be both high and low.

We load the scene, and our FPS melts before our eyes.

Why is high FPS so important? The fact is that with a low FPS the picture becomes jerky: instead of smooth movement we start to see individual images.

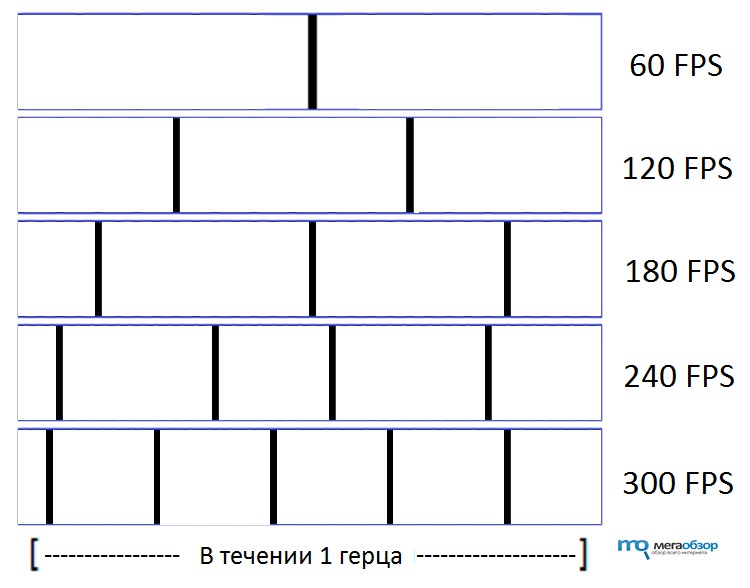

At a constant FPS, you can calculate the processing time of one frame: about 33 milliseconds at 30 FPS and about 16.7 milliseconds at 60 FPS. We can conclude that doubling the FPS requires each frame to be processed twice as fast.
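The frame-time calculation is simple enough to write down directly. This is a minimal sketch, and the function name is just an illustrative choice.

```python
def frame_time_ms(fps):
    """Time budget for one frame, in milliseconds, at a constant FPS."""
    return 1000.0 / fps


if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(fps, "FPS ->", round(frame_time_ms(fps), 1), "ms per frame")
```

Each doubling of the frame rate halves the time available to render a frame, which is why the jump from 60 to 120 FPS is so much more demanding than it looks.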

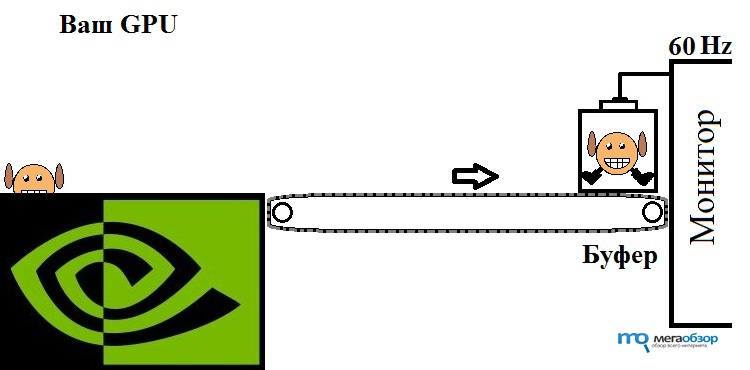

Monitor refresh rate - the frequency at which your monitor updates all its pixels. Unlike FPS, the monitor refresh rate (hereinafter "hertz", because it is simpler and shorter; don't read too much into the word) is fixed, in other words, constant. If your monitor is 60 Hz, then once every 1 second / 60 = 16.6 milliseconds the screen flickers and the frame changes. You probably remember from childhood, or for some from your youth, pointing the first camera phones at CRT televisions. You saw flickering there; the same thing happens in our LCD monitors, but we don't notice it. From this we conclude that frame rate and "hertz" are not on the same wavelength. When the monitor changes frames, it displays whatever is currently in the "buffer". The buffer is the place where the finished frame is stored for output (in practice the technology may differ, but the essence is the same).

For an example of interaction, we will take a monitor with a frequency of 60 Hz.

Let's consider several cases.

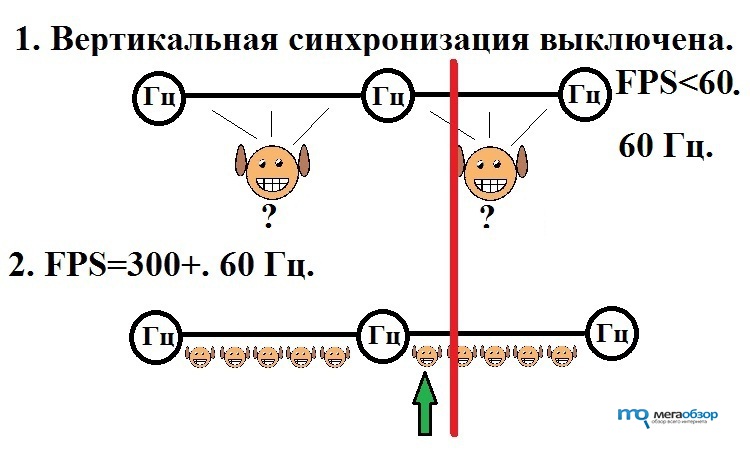

1. The average FPS does not exceed your monitor's refresh rate of 60 Hz.

In the interval between two refreshes of your monitor, the video card sends no more than one frame to the buffer. The lower the FPS drops, the more often a monitor refresh will not bring a new frame.

As soon as a frame is rendered, it is sent with the video signal to the buffer. When the time comes, the monitor refresh displays the contents of the buffer on the screen.

2. The average FPS exceeds your monitor's refresh rate of 60 Hz.

Things are more complicated here: more than one frame falls within a single interval between monitor refreshes (hereinafter, the computational segment).

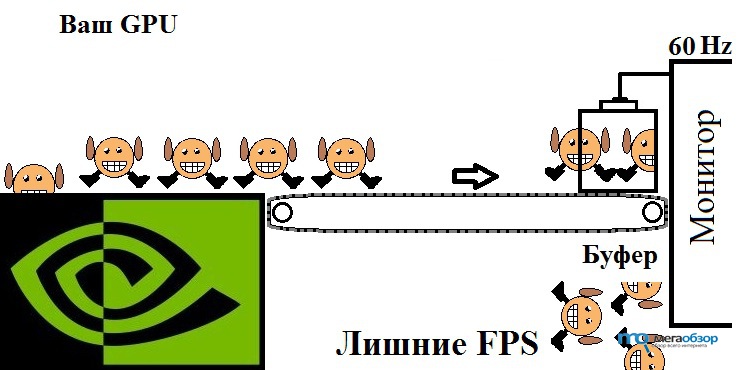

In other words, chaos with a capital C. Your video card manages to send more than one frame per monitor refresh.

The illustration shows the case where you have 300+ FPS. In the interval between monitor refreshes, the video card manages to render 5 or more frames. During this time all these frames arrive in the buffer, each new one displaces the previous one, and the previous one is simply lost. There is also one very interesting point: if the moment of the monitor refresh coincides with a new frame arriving in the buffer, the monitor starts displaying information from two different frames. The consequence for you is screen tearing.
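Both unsynchronized cases can be sketched by counting how many frames finish inside each interval between monitor refreshes. The model assumes perfectly even frame times and that frame 0 and refresh 0 start at the same instant, which real hardware does not guarantee. `Fraction` is used only to avoid floating-point rounding at interval boundaries.

```python
from fractions import Fraction


def frames_per_refresh(fps, refresh_hz, refreshes):
    """Count the frames that finish inside each refresh interval.

    Simplifying assumptions: constant frame time, and rendering starts
    exactly at the first refresh.
    """
    frame = Fraction(1, fps)           # seconds needed to render one frame
    refresh = Fraction(1, refresh_hz)  # seconds between monitor refreshes
    counts = []
    for i in range(refreshes):
        start, end = i * refresh, (i + 1) * refresh
        # Frames finish at frame, 2*frame, ...; count those in (start, end].
        counts.append(end // frame - start // frame)
    return counts


if __name__ == "__main__":
    print(frames_per_refresh(45, 60, 4))   # some refreshes get no new frame
    print(frames_per_refresh(300, 60, 3))  # several frames per refresh
```

An interval with a count of 0 means the monitor repeats the old frame (case 1), while a count above 1 means earlier frames are overwritten in the buffer before they are ever shown (case 2).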

How can these "tears" be avoided? There are several technologies for synchronizing frames with the monitor's refresh rate; in other words, these technologies put FPS and "hertz" on the same wavelength.

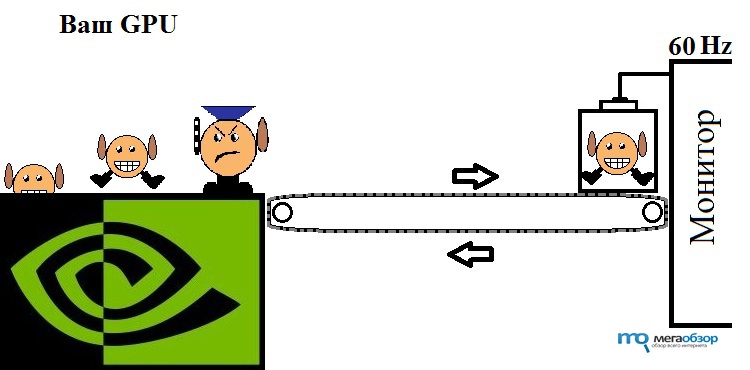

3. Vertical sync is enabled.

There is no room for chaos here. Your video card renders each frame in time for the monitor refresh.

A "regulator" on the video card knows the monitor's refresh rate and renders only one frame per refresh.

4. G-Sync.

Adaptive synchronization technology from NVIDIA. A chip built into the monitor makes the monitor refresh when a new frame arrives (within the limits of its refresh rate). Everything here is too good and boring to talk about :)

Pros and cons of Vsync

- Pros

- Screen tearing disappears.

- The video card does not run at full load, which lowers its temperature and noise level.

- Cons

- The frame rate is reduced to the monitor's refresh rate. But surely you can't see more frames than that on a 60 Hz monitor anyway?

- The response time of all your actions in the game increases. Why? I'll try to explain this below.

- The "regulator" on the video card, like its other elements, consumes computing resources. This leaves fewer resources for rendering frames.

- Before turning on vertical sync, make sure you have some FPS headroom. If the video card cannot sustain the required FPS, vertical sync will lower it to the next whole fraction of the refresh rate. In our case that is 30 FPS, and no one wants to play at 30 FPS, unless you are a "console player"; more on them later.
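The drop to "the next multiple" in the last point can be sketched as follows. The model assumes plain double-buffered vertical sync, where a frame that misses a refresh has to wait for the next one. Triple buffering and adaptive technologies behave differently, and the function name is illustrative.

```python
import math


def vsync_fps(raw_fps, refresh_hz):
    """Effective FPS under simple double-buffered vertical sync.

    raw_fps    -- what the video card could render without synchronization
    refresh_hz -- monitor refresh rate
    """
    if raw_fps >= refresh_hz:
        return float(refresh_hz)  # capped at the refresh rate
    # Each frame occupies a whole number of refresh intervals.
    refreshes_per_frame = math.ceil(refresh_hz / raw_fps)
    return refresh_hz / refreshes_per_frame


if __name__ == "__main__":
    for raw in (75, 60, 55, 31, 29):
        print(raw, "->", vsync_fps(raw, 60))
```

Under this assumption a card that manages 55 FPS on a 60 Hz monitor ends up at 30 FPS with vertical sync on, and one that manages 29 FPS ends up at 20, which is why the headroom matters.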

Does it make sense to have 75, 90, 120 FPS in games on a 60 Hz monitor?

You have just read about the interaction between the monitor and the video card and have most likely decided: "I'll turn on this synchronization of yours and have no more trouble." But there are some subtleties here.

Have you met people who told you that they see a difference between 60 and 120 FPS, and see it even on a 60 Hz monitor? Have they lost their minds? Or not? If you stand next to a player and watch him play, you will not see the difference. But everything changes if you are the player interacting with the game world.

We have three monitor refreshes ("hertz") in front of us. Between them there are 2 computational segments, in one of which an event occurred 12 milliseconds after the monitor refreshed.

The red line is a game "tick" (moment), and it doesn't matter which one. It could be the first frame of a grenade exploding, or a camera turn after which a light comes on within one tick. It doesn't matter at all!

As we remember, the monitor refreshes every 1000 milliseconds / 60 = 16.66 milliseconds. In the first case, we cannot be sure that the frame rendered in this segment managed to capture our "tick".

But in the second case, we can clearly see that the last rendered frame appeared after the "tick", so it contains information about it. And in 16.66/5 ≈ 3.33 milliseconds we will see our "tick" on the monitor. In the first case, by contrast, the frame misses the "tick", and we will see it only after the next render, namely after 16.66 + (16.66 - 12) = 21.32 milliseconds.
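The reasoning about "ticks" can be reproduced with a small model. It assumes each frame samples the game state when rendering starts, takes exactly one frame time to render, and is shown at the first monitor refresh after it is finished, with frame 0 and refresh 0 aligned. These are illustrative assumptions, not a description of any particular engine or driver.

```python
import math
from fractions import Fraction


def tick_to_display_ms(fps, refresh_hz, tick_ms):
    """Delay between a game event and the refresh that first shows it.

    Assumptions: constant frame time, state sampled at the start of a
    frame, finished frame shown at the next refresh at or after completion.
    """
    frame = Fraction(1000, fps)           # render time of one frame, ms
    refresh = Fraction(1000, refresh_hz)  # interval between refreshes, ms
    tick = Fraction(tick_ms)
    start = math.ceil(tick / frame) * frame        # first frame that sees the tick
    finished = start + frame                       # moment it reaches the buffer
    shown = math.ceil(finished / refresh) * refresh  # refresh that displays it
    return float(shown - tick)


if __name__ == "__main__":
    print(round(tick_to_display_ms(60, 60, 12), 2))   # 60 FPS on 60 Hz
    print(round(tick_to_display_ms(300, 60, 12), 2))  # 300 FPS on 60 Hz
```

With a tick 12 ms into the segment, this model gives roughly 21 ms at 60 FPS and under 5 ms at 300 FPS. The exact figures depend on where the tick falls relative to frame boundaries and on the assumptions above, but the gap between the two cases is the point.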

The accumulation of such "ticks" creates the difference between 60 and 120 FPS on a 60 Hz monitor. It is impossible to explain or show this difference on video; you need to experience it yourself.

We ignored all other delays associated with computer hardware, from mouse response to video signal speed, because they do not change the essence.

I also ignored the case with vertical sync enabled, because it is the worst one: the "regulator" renders and sends the frame just before the monitor refreshes, so the delay of each "tick" will be up to 32 milliseconds, which is the same frame delay as at 30 FPS, and I hope you can see the flaws of 30 FPS. This is the second disadvantage of vertical sync listed above; it is very easy to feel if you switch synchronization on and off directly in the game.

A visual demonstration of gameplay in which many of our "ticks" occur, namely camera rotations. If you move the camera in a similar way in CS:GO at 60 and 120 FPS on a 60 Hz monitor and still don't notice the difference, then try not to think about it; it's not your thing :)

"Gold standard"

Let's start with the fact that there is no "gold standard". There are the requirements of players on the one hand, which may themselves vary, and the technical capabilities of developers on the other. If developers had the opportunity to release every project with millions of FPS, they would not miss it. Still, we will try to define some comfort zone in which the image looks smooth. Let's look at a few cases.

Xbox One and PS4

When these consoles were being developed, the graphics system chosen for them was a close analogue of the Radeon HD 7850. Try taking an HD 7850 and playing modern games on it. Some games will drop below 30 FPS. What do developers do in this case? They reduce the rendering resolution. Take any recent Assassin's Creed: both consoles run it at 900p and 30 fps, and that is the best case; it is not hard to find tests on YouTube in which the consoles cannot hold 30 FPS. Can 30 FPS be called the gold standard? No! This is the floor, below which there is nowhere left to fall.

As long as they are busy, the situation is unlikely to change. The main thing is that consoles do not cost $1000.

VR

VR runs at frame rates of 90 FPS and above: the screen is as close to your eyes as possible, so low FPS is more noticeable and leads to fatigue and feeling unwell.

Let's get back to the monitors. The image looks smooth when we do not see the transition from one frame to another. Unfortunately, here we return again to the fact that there are as many opinions as there are people.

In this article, my goal was to explain to you the advantage of 60+ FPS on a 60Hz monitor.

I'll leave some recommendations for video cards for gaming on Full HD monitors. Below you can see the tests of these video cards on our website.

30 FPS

It greatly exceeds the power of both consoles, so while they are playing on the consoles, you will not be left idle.

60 FPS this year

shows excellent results in games at a compromise price.

60 FPS in future experiments from Ubisoft :)

has excellent performance and is much better in price compared to its “big brother”.